Tesla’s AI Day took place on August 19th, 2021. You can find the official YouTube link here, and Lex Fridman has a good summary here. These are my observations as a completely non-technical person. Remember, this was a recruiting event for talent in these areas: computer hardware, neural networks, autonomy algorithms, code foundations, evaluation infrastructure, and the Tesla Bot.

The YouTube video is broken into the following segments:

- 47 minutes: AI Day begins

- 1 hour and 45 minutes: Dojo

- 2 hours and 5 minutes: Tesla Bot, aka Optimus Subprime

- 2 hours and 13 minutes: Q&A with the team

The first thing we see is a Tesla vehicle completing a drive on Full Self-Driving (FSD) beta. What strikes me is not the car driving; it’s the visualization of FSD on the main screen. The car sees pedestrians, bicyclists, stop signs, and traffic lights; it leans into curves, navigates tight streets, and makes numerous left turns. We’ve seen all this before. What’s cool is the science of how Tesla made it happen, and that is the purpose of AI Day.

Andrej Karpathy walks us through how Tesla moved from processing single images from each camera to feeding video from multiple cameras into its neural networks.

Observation: The incredibly hard work of switching from single-image training to the multi-camera video system pays off: it provides significantly better predictions than a single camera.

At 1 hour and 13 minutes, Ashok Elluswamy tells us the goal of planning is to maximize safety, comfort, and efficiency. His example is a car in the right lane that has to move two lanes to the left. The car runs 2,500 simulations in 1.5 ms, considering the position, motion, and speed of surrounding cars. It considers slowing down and speeding up, and finally settles on the option that best balances safety and comfort while making the turn.

Observation: This is phenomenal. A person uses judgment and prior experience to choose the best way to change two lanes. Here the autonomous car runs through many scenarios to stay on track with its navigation, much as a person would. In this case, it lets the car on the left pass, changes lanes, and quickly changes again to arrive at the light.

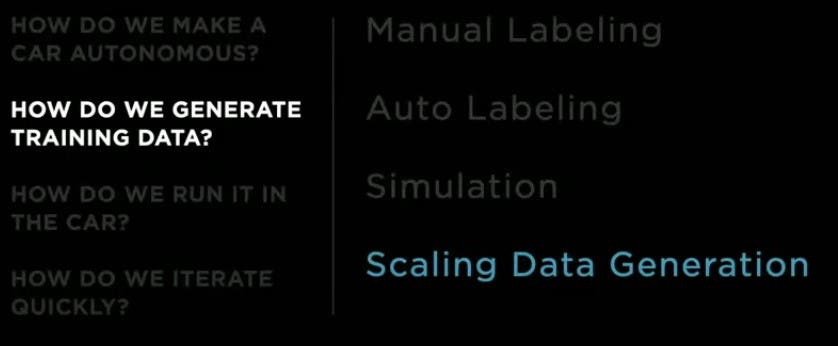
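Here is a toy Python sketch of the idea of scoring many candidate maneuvers and keeping the one with the best balance of safety, comfort, and efficiency. It is not Tesla’s planner: the candidate grid, the cost terms, the weights, the simple gap model, and the names `Candidate`, `trajectory_cost`, and `plan_lane_change` are all hypothetical, and the 50 × 50 = 2,500 grid only echoes the number quoted on stage.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical weights for the three planning goals named on stage.
W_SAFETY, W_COMFORT, W_EFFICIENCY = 10.0, 1.0, 0.5


@dataclass(frozen=True)
class Candidate:
    accel: float        # m/s^2 while waiting; negative means slowing down
    merge_delay: float  # seconds to wait before starting the lane change


def candidate_grid() -> list[Candidate]:
    """50 accelerations x 50 delays = 2,500 toy candidates."""
    accels = [-2.0 + 4.0 * i / 49 for i in range(50)]  # -2.0 .. 2.0 m/s^2
    delays = [5.0 * j / 49 for j in range(50)]         # 0.0 .. 5.0 s
    return [Candidate(a, d) for a, d in product(accels, delays)]


def trajectory_cost(c: Candidate, gap_to_left_car: float) -> float:
    """Toy weighted cost; lower is better."""
    # Toy assumption: the gap to the left car grows by (2 - accel) meters
    # per second of waiting, so waiting, and especially slowing down, opens it up.
    gap_at_merge = gap_to_left_car + (2.0 - c.accel) * c.merge_delay
    safety = max(0.0, 10.0 - gap_at_merge)   # want at least 10 m of gap
    comfort = c.accel ** 2                   # harsh braking or acceleration
    efficiency = c.merge_delay - c.accel     # waiting or slowing costs time
    return W_SAFETY * safety + W_COMFORT * comfort + W_EFFICIENCY * efficiency


def plan_lane_change(gap_to_left_car: float) -> Candidate:
    """Score every candidate and keep the cheapest one."""
    return min(candidate_grid(), key=lambda c: trajectory_cost(c, gap_to_left_car))


if __name__ == "__main__":
    print(plan_lane_change(gap_to_left_car=0.0))   # left car right beside us
    print(plan_lane_change(gap_to_left_car=20.0))  # plenty of room
```

In this toy setup, with the left car right beside us the cheapest option waits before merging (letting the gap open, a bit like the car on stage letting the other car pass), while with a 20 m gap it merges right away. For scale, Tesla’s figure of 2,500 options in 1.5 ms works out to about 0.6 microseconds per option.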

Andrej comes back at 1 hour and 24 minutes to talk about manual labeling. He talks about the need for clean data to train the neural nets in vector space, and mentions that Tesla has a 1,000-person in-house data labeling team. The team quickly went from labeling 2D images to labeling in 4D (3D space plus time). If you label something once, the label is remembered and applied across all cameras and many frames, which saves a lot of time compared with labeling the same item over and over. Even so, manual labeling is too slow, which leads into the next section on auto labeling.

Observation: I don’t know how other autonomous vehicle companies are doing it, but manual labeling alone won’t work: it’s too time- and labor-intensive.

Ashok is back at 1 hour and 28 minutes to talk about auto labeling. He explains that the amount of labeling needed is far more than human labelers can handle, which is where auto labeling comes in. Auto labeling works on video clips of 45 to 60 seconds. I’ll be honest: a lot of the terminology and math in the details went over my head with a whoosh. What’s cool to me is how Tesla can combine clips from different vehicles on different trips to help auto label significant features. Tesla can also “cheat” a bit, because it sees what happens both before and after an occlusion (how I see it: a vehicle passes in front of a pedestrian, but the system keeps track of the pedestrian after the vehicle passes), which lets it generate auto labels faster. Auto labels cover road texture, walls, barriers, static objects, moving objects through occlusions, pedestrians, motorcycles, and parked cars, among other things.
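To make the “cheating” idea concrete, here is a toy Python sketch of hindsight gap-filling. It is not Tesla’s method: it assumes one pedestrian track stored as an (x, y) position per frame, with `None` where a passing vehicle hides the pedestrian, and it uses plain linear interpolation as a stand-in for whatever Tesla actually does. The name `fill_occlusions` is hypothetical.

```python
from typing import Optional

Point = tuple[float, float]  # (x, y) position of the pedestrian in meters


def fill_occlusions(track: list[Optional[Point]]) -> list[Optional[Point]]:
    """Fill occluded frames (None) by linear interpolation between the last
    visible frame before the gap and the first visible frame after it.

    An offline labeler can do this because it sees the whole clip; a car
    driving in real time has not seen the 'after' frames yet. Gaps at the
    very start or end of the clip stay None, since only one side is known.
    """
    filled = list(track)
    last_seen: Optional[int] = None  # index of the most recent visible frame
    for i, p in enumerate(track):
        if p is None:
            continue
        if last_seen is not None and i - last_seen > 1:
            (x0, y0), (x1, y1) = track[last_seen], p
            span = i - last_seen
            for k in range(1, span):
                t = k / span
                filled[last_seen + k] = (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
        last_seen = i
    return filled


if __name__ == "__main__":
    # Frames 2 and 3: a passing vehicle hides the pedestrian.
    # Frame 5: the clip ends while the pedestrian is hidden again.
    track = [(0.0, 0.0), (1.0, 0.0), None, None, (4.0, 0.0), None]
    print(fill_occlusions(track))
```

In the example, frames 2 and 3 get positions between the frames before and after the occlusion, while frame 5 stays unlabeled because the clip ends before the pedestrian reappears.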

Observation: The end of this segment shows how Tesla used auto labels to remove radar within 3 months. This is phenomenal, and judging from the examples given, vision-only plus auto labeling improves tracking and planning compared with vision plus radar. Tesla collected and automatically labeled 10,000 clips within a week to clean up the vision-only system; it would have taken humans several months to do the same. I can only imagine how big a disadvantage other automakers face without numerous video clips and without an auto labeling pipeline that condenses months of work into a week. Ashok then pivots seamlessly from auto labeling to simulation.

Simulation starts at 1 hour and 35 minutes. Simulation helps in three areas: when data is difficult to source (they use people chasing a dog in the middle of a highway. Ha!), when data is difficult to label (a swarm of people in a big city), and closed-loop scenarios (such as having the vehicle park in a parking lot). It also gave Tesla an excuse to show off a Cybertruck in the simulation. At first glance, the simulation was so good that I thought it was reality. You have to slow it down or pay close attention to notice that some things are off in the render.

As Johnna mentioned, the Cybertruck is a player in the simulation game. The simulation provides perfect labels in vector space. To make the simulation as realistic as possible, Tesla needs accurate sensor data, photorealistic rendering (free of aliasing, plus a funny example of 10 police vehicles making a left turn), diverse actors and locations (including 2,000 miles of hand-built roads), scalable scenario generation, and scenario reconstruction. Tesla has used simulation to improve pedestrian, bicycle, and vehicle detection and kinematics. On its to-do list are the general static world, road topology, more vehicles and pedestrians, and reinforcement learning. Ashok then hands off to Milan Kovac to discuss scaling data generation.

Observation: Some people think Tesla removed radar on a whim to reduce costs. Cost savings may be a side benefit, but the real reason was to improve how the car interacts with objects on the road. That Tesla ran the necessary simulations, removed radar, and improved the results within 3 months shows this was a thoughtful process. I know of few, if any, companies that could do the same in 3 months. The simulation layer is an amazing achievement, blending what the cameras see with how the car should respond. With the highway and city neural nets being combined, we’ll continue to see tremendous progress on FSD Beta. If you look at Tesla’s AI page, there is no shortage of work to do. To me, that means FSD Beta will remain incomplete until those areas are tackled. Or, to look at it optimistically, safety will continue to improve with each simulation update.

The focus shifts to hardware integration at 1 hour and 42 minutes. Milan briefly covered scaling data generation, but the more notable topic came next: “How Do We Run It In The Car.” The neural networks and algorithms work together with the FSD Computer to minimize latency and maximize frame rate. The FSD Computer has two systems-on-chip (SoCs); only one of them outputs vehicle commands, and the other serves as an extension of compute. Milan continues with “How Do We Iterate Quickly.” The numbers are impressive: one million runs a week, three datacenters plus the cloud, more than 3,000 Autopilot computers, bit-perfect evals on real FSD AI Chip hardware, and custom job-scheduling and device-management software. Tesla has more than 10,000 GPUs to train the network. Milan says this is not enough, and hands off to Ganesh Venkataramanan to introduce Dojo, the next step.

Observation: This is another area where vertical integration helps Tesla. Hardware and software work seamlessly together, which reminds me of Apple. Legacy automakers will find this hard to compete with. The hardware they know is internal combustion engines and related parts. They handed software to third parties, and their user interface software lags behind Apple’s and Google’s. Vertically integrating and attracting the talent needed will be a large, multi-year task for them. The advantage will go to new EV companies that can combine hardware and software from the start. I was surprised to learn that Tesla runs on-premises datacenters, which is not what I would have expected from a company that moves so fast.

Ganesh and Dojo run from 1 hour and 45 minutes to 2 hours and 5 minutes. We have covered Dojo in four parts here, here, here, and here, so I’m only going to list my observations.

Observation: The next generation of training compute will be impressive, with Dojo landing somewhere in the top 10 most powerful supercomputers in the world, depending on how it is measured. Tesla used a first-principles approach to eliminate problems with heat, network bandwidth, computing power, and compiler software. If people thought Tesla only assembled vehicles and sold batteries and energy-generation products, the work on Dojo shows it has the chops to compete with the best in Silicon Valley and the world. That is a frightening thought for any potential Tesla competitor.

Tesla is aiming to recruit the best people, and lots of them. There are many problems to solve. Imagine Tesla using Dojo to re-orient its Austin factory for better performance. I wonder if Tesla will use Dojo to enter the HVAC market, or to build more advanced systems combining its batteries, solar, and EVs. Could Dojo be used for protein-folding simulations? I don’t know, but Tesla is not shy about solving big problems. It will keep creating better factories and better vehicles, fast. It’s enough to make an auto exec pop Pepto-Bismol on a daily basis. To top it all off, Tesla has identified ways to scale the monster known as Dojo by another 10× in performance. Wow.

Final thoughts: Wall St. analysts, even the bullish ones, think Tesla was showing off by discussing the Tesla Bot. They are worried about competitors, China, and vehicle growth. I am sanguine about those short-term concerns. This event was meant to recruit the best people to solve the hardest problems over the next three to five years, and having the best people gives Tesla a good chance of fending off competitors and growing vehicle sales. Tesla is a serious AI company. It is using AI to make its factories, EVs, and renewable energy offerings smarter, and it can readily apply that AI to other horizontal opportunities. Seeing Tesla as only an auto company misses the mark. Worrying about Tesla’s short-term growth misses it just as badly.