In the days after the supposed “Autopilot” crash in Texas, we saw a lot of garbage floating around. Pretty quickly, we found out that Autopilot wasn’t even on, but most outlets kept running with the story anyway, relying on a local official’s assertion that they were “100% certain” nobody was in the driver’s seat. Then yesterday, we found out that there may have actually been someone in the driver’s seat. Throughout all of the bad information and drive-by coverage, I kept seeing this talking point come up: that it was foreseeable that people would abuse Autopilot and that Tesla should do more to prevent that.

After looking into this idea further, it’s pretty clear that such a standard would likely be unreasonable in the courts and wouldn’t make a good basis for regulatory action either.

Even The “Reasonably Foreseeable Abuse” Legal Standard Has Limits

Legally, there’s a lot of contradictory and messy case law that revolves around this standard. I’m not a lawyer, but I found a blog post by a law firm about the topic that explains it pretty thoroughly.

“Under a reasonable foreseeability standard, a manufacturer generally may be held liable for injuries caused by a product even when a consumer failed to use the product as intended. In these circumstances, the consumer would need to show that the risk was caused by a reasonably foreseeable misuse that rendered the product defective and was known or should have been known to the manufacturer when it sold the product.”

The problem with this standard arises when courts try to apply it. Later in the blog post, they tell us about how inconsistently it is applied. Even when faced with nearly identical circumstances, courts in different states will rule in completely opposite ways. For example, some states hold a soda machine manufacturer liable when some moron tries to shake the machine to get a free soda and gets injured, while other states don’t see it as a risk that the manufacturer could reasonably be held liable for.

They go on to explain that there is also a reasonability standard here. Foreseeable abuses of a product that aren’t reasonable (at least in theory) shouldn’t fall on the shoulders of the manufacturer. Fortunately for those of us who cook, even the deadly misuse of kitchen knives has been seen as an unreasonable abuse. Efforts made by a manufacturer to make abuse harder or less enticing (Tide Pods are a great example here) can also be considered toward reasonability.

In other words, blatant and extreme misuses of a product aren’t the responsibility of the manufacturer. In those cases, the person knows very clearly that they aren’t using the product correctly, and thus assumes responsibility for their own actions.

Autopilot Abuse Probably Falls Under Unreasonable Abuse

While we know at this point that Autopilot wasn’t used in the wreck that occurred near Houston, we do know that some people (including some journalists) abuse the technology. What’s more important than the abuse is what it takes to get to the point of abuse and whether that abuse was blatantly unreasonable.

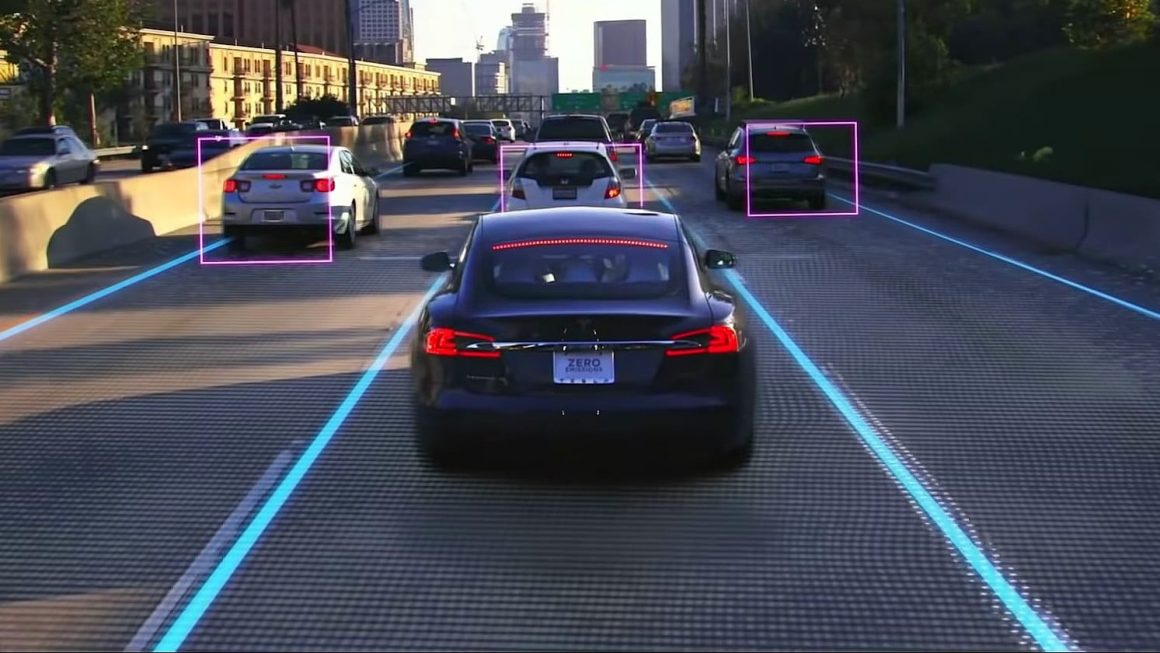

First off, to abuse Autopilot you have to do something about the wheel sensor. For those who haven’t tested Autopilot out themselves, this is how it behaves if you don’t keep the weight of your arms and hands on the wheel.

Consumer Reports hung some objects from the wheel. There’s also the Autopilot Buddy “cell phone holder” that provides enough weight to keep Autopilot from knowing there isn’t a hand on the wheel. Other people just buy strap-on exercise weights and attach those to the wheel.

This might sound like it’s too easy, but keep in mind it takes an intentional decision to disable this safety feature.

Second, if you want to completely leave the driver’s seat, you’ve gotta trick the car into thinking the seat belt is still buckled. This can be done by buckling the belt in before you get in and sitting on top of it, or by buying a fake seat belt end and buckling it in.

This is now the second intentional decision one must make to trick the system.

As I said earlier, I’m not a lawyer, but it seems to me that Tesla took steps to prevent abuse, and a driver has to knowingly and willfully bypass those steps to abuse the system. That behavior (bypassing the safeties) would be seen as unreasonable and highly negligent by a reasonable person.

Really, responsibility should fall to the person unreasonably abusing Autopilot.

The Push To Use The Regulatory State To Bypass This

The people pushing a “foreseeable abuse” standard probably know that, at least in civil court, there’s a limit to this doctrine (the abuse must not be unreasonable). Knowing this limitation, they want to see if they can impose it without that protection. Their strategy? To get federal regulators to use the rulemaking process to impose it instead.

Some argue that Tesla should be forced to use monitoring cameras with Autopilot. I understand the idea, but as we know, sunglasses have proven to be a problem with some of these systems. I don’t know about you, but it’s a pain to keep eyes on the road without sunglasses. Also, don’t think for a second that people won’t find a way to abuse their way around a monitoring camera the same way they bypassed the other features.

If they choose to impose a “foreseeable abuse” standard without mandating cameras, that’s even worse. As we’ve seen above, there’s a lot of room for interpretation, which would leave automakers unsure of whether they’d even be in compliance. Even with driver monitoring cameras, would the manufacturers be on the hook to add yet another system if drivers start tricking them?

Instead of using civil suits or regulatory rulemaking to address this issue, we should really be using criminal law against the people abusing Autopilot. They’re likely already breaking multiple state laws, like reckless endangerment and child abuse (if there are children in the car). Federal and state investigators should be seriously investigating the social media influencers and others who flaunt their abuse of the system, identifying them, and making it clear that it doesn’t fly.

Will people keep abusing these systems? Probably, but there’s no technological barrier people won’t find a way around. Build a fence? People will bring ladders. Lock something up? People will pick the lock or drill the safe. Put passwords on something? People will hack their way around them (though usually not as fast as the “guy in the chair” or boy wonders on TV do).

At some point, we have to realize that despite endless safeguards, risk of punishment, and other things to discourage bad behavior, some people will still do bad things.