Autopilot was engaged. It was just after midnight in North Carolina, a time when roads are mostly clear. The driver was watching a film on their phone, and the Model S crashed into a deputy’s vehicle. There’s a reason why you’re not allowed to watch Netflix or YouTube on the Tesla infotainment screen.

The collision occurred as a deputy and a Highway Patrol trooper were on the side of the road responding to a previous crash, according to local news station CBS17. The Model S slammed into the deputy’s vehicle, which then slid into the trooper’s vehicle and pushed both officers to the ground.

Fortunately, no one was hurt. Area sheriff Keith Stone described the scene as a “simple lane closure.”

Tesla Autopilot Design & Driver Responsibility

Tesla’s Autopilot allows a driver to engage autonomous technology that takes over the steering, acceleration, and braking of the car. It is a “driver assist” feature that instructs the driver to remain alert with hands on the wheel when Autopilot is engaged — otherwise known as Level 2 Autonomy.

According to Autopilot Review, Tesla Autopilot is a best-in-class Distance Cruise Control and Lane Centering System meant primarily for freeway use. It does, however, require drivers to keep within the parameters of the system’s capability and to pay attention to traffic.

Tesla clearly states that the consumer is ultimately responsible for using the technology.

The situation in which Autopilot is currently most vulnerable is when stationary objects suddenly appear in the vehicle’s path at freeway speeds. Autopilot will not suddenly brake for stationary objects, since doing so may cause more risk, especially if there is a false positive.

The Tesla owner’s manual includes the following warning:

“Traffic-Aware Cruise Control cannot detect all objects and may not brake/decelerate for stationary vehicles, especially in situations when you are driving over 50 mph and a vehicle you are following moves out of your driving path and a stationary vehicle or object is in front of you instead.”

Tesla’s Safety Records

Tesla vehicles are some of the safest vehicles on the road, with extremely low death and injury rates. Tesla is known for being an extremely innovative company, including rolling out software updates continually over the air and providing consumers with new, advanced Autopilot updates.

But the customer must still respect the features’ limits and the directions of the system.

Tesla received its first Insurance Institute for Highway Safety award with the 2019 Model 3. To earn a “Top Safety Pick,” a vehicle must earn good ratings in the driver-side small overlap front, moderate overlap front, side, roof strength, and head restraint tests, as well as a good or acceptable rating in the passenger-side small overlap test. It also needs an available front crash prevention system with an advanced or superior rating and good-or-acceptable-rated headlights.

The Tesla Vehicle Safety Report offers:

“Because every Tesla is connected, we’re able to use the billions of miles of real-world data from our global fleet, of which more than 1 billion have been driven with Autopilot engaged, to understand the different ways accidents happen.”

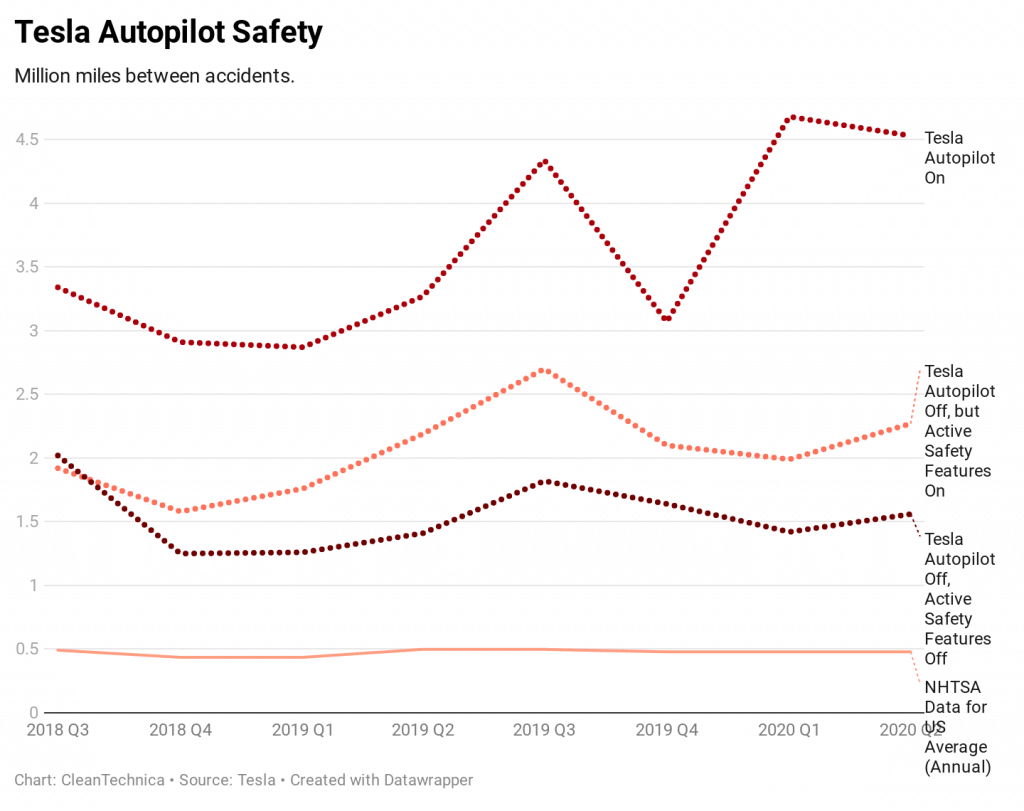

Tesla’s own data indicates that, in the second quarter of 2020, the company registered one accident for every 4.53 million miles driven with Autopilot engaged. (You decide if Autopilot is a safety enhancement.)
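If you want to weigh that number against crash statistics from other sources, it helps to turn "one accident every N miles" into a rate. The short Python sketch below does only that conversion. The function name is our own illustration, not something from Tesla's report, and the result says nothing about differences in road type or driving conditions between data sets.

```python
def accidents_per_million_miles(miles_per_accident: float) -> float:
    """Convert 'one accident every N miles' into accidents per million miles."""
    if miles_per_accident <= 0:
        raise ValueError("miles_per_accident must be positive")
    return 1_000_000 / miles_per_accident


# Figure quoted in this article: one accident per 4.53 million miles
# driven with Autopilot engaged (Q2 2020, Tesla's own data).
autopilot_rate = accidents_per_million_miles(4_530_000)
print(f"{autopilot_rate:.3f} accidents per million miles")  # 0.221
```

Any comparison figure you plug in should be checked for what it counts as an "accident" and which roads it covers, since those definitions vary by source.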

Every Tesla comes standard with active safety features, including Lane Assist, Lane Departure Avoidance, Collision Avoidance Assist, and Speed Assist. Consumers can also purchase more advanced features, including “Full Self-Driving” mode (for an additional cost of $8,000). Full Self-Driving offers automated lane changes and advanced autonomous driving features such as Navigate on Autopilot (autonomous driving from freeway on-ramp to off-ramp), Smart Summon, and Autopark.

The NHTSA has credited the Autopilot software with helping to mitigate crashes overall.

Is “Autopilot” an Appropriate Name?

“What they name the system has implications for what a driver understands,” says IIHS president David Harkey. Elon Musk has argued for years that “Autopilot” is an appropriate name for the features, since they do basically the same thing that autopilot in a plane does for a pilot. Musk, who owned a personal jet that he flew for recreation after selling PayPal, described the origins of the Autopilot name in 2013: “Autopilot is a good thing to have in planes; we should have it in cars.”

The problem comes if drivers expect the system to do more than it can, or if the system is good enough that drivers stop paying attention but not good enough to avoid accidents in all scenarios (see the story at the top of the page). The US National Transportation Safety Board claims that drivers become complacent with partial in-car autonomy. Some drivers also use Autopilot outside the conditions it was designed for, which increases the danger.

Don’t we all seem to know a new Tesla owner who shows off by saying, “Look! No hands!”? However, for anyone thinking that the system isn’t clear enough about its limits, CleanTechnica CEO Zachary Shahan adds some context from an owner’s perspective:

“No doubt about it: you are not allowed to watch a movie on your phone while driving. I don’t think there’s any confusion about whether you are allowed to do that or not. Tesla will not allow Netflix or YouTube or any games to be on if the car isn’t in park. Everyone with a Tesla knows this. And the reason is clear: they could distract the driver. You cannot watch a movie while using normal cruise control and you cannot watch a movie while using Autopilot. This is clear and well established. The same goes for watching something on your phone, of course. I don’t think any Tesla owner thinks they’re legally allowed to watch a movie on their phone while driving just because Autopilot is on. Again, just the same as everyone knows you can’t watch a movie on your phone just because Cruise Control is on.

“Tesla’s policy, various warnings, and infotainment settings make it absolutely clear the driver has to pay attention to driving.

“As a Tesla owner, I think this idea that is popular in some places that Tesla drivers don’t know this is just rubbish. You get soooooo many warnings to make sure to pay attention to the road, to keep your hands on the wheel, and to remain alert. You’d have to be a piece of celery to not get the point.

“Yes, there are still people who break the law. There are still people who drink and drive. There are still people who do drugs and drive. There are still people who text and drive (I see it all the time in other cars on the road). And there are still people who watch movies and drive (in Teslas as well as other cars). There are some people who steal and break the law in other ways, for that matter. So, there are also people who break the law in Teslas. It’s unfortunate, but it’s not because it isn’t entirely clear what is allowed and what is not.”

A Chronicle of Tesla Autopilot-Related US Accidents

Over-reliance on Autopilot in several crashes has drawn the attention of the National Transportation Safety Board (NTSB) a few times over the years. Have the same kinds of concerns been raised with regard to cruise control in the past? Probably.

- 2016: Model S, Williston, Florida — The NTSB cited the driver’s over-reliance on the automated system when a Model S collided with an upright semi-trailer.

- 2018: Model X, Mountain View, California — After the fatal 2018 accident, Tesla commented, “Autopilot can be safely used on divided and undivided roads as long as the driver remains attentive and ready to take control.” The NTSB pointed to several parties for failing to prevent the crash:

  - Tesla: Autopilot failed to keep the driver’s vehicle in the lane, and its collision-avoidance software failed to detect a highway barrier;

  - The Driver: The driver was probably distracted by a game on his phone;

  - The California Department of Transportation (Caltrans): Caltrans should have fixed the barrier issue prior to the accident.

- 2019: Model 3, Delray Beach, Florida — The NTSB cited the driver’s over-reliance on automation and Tesla’s design, specifically “the company’s failure to limit the use of the system to the conditions for which it was designed.” The driver was killed when the Model 3 he was driving didn’t recognize a semi truck in cross traffic and had its roof sheared off.

- 2019: The NTSB determined that driver error and Autopilot design led to a crash involving a Model S and a parked Southern California fire truck. The accident brief gave the probable cause of the Culver City rear-end crash as: “driver’s lack of response to the stationary fire truck in his travel lane, due to inattention and overreliance on the vehicle’s advanced driver assistance system; the Tesla’s Autopilot design, which permitted the driver to disengage from the driving task; and, the driver’s use of the system in ways inconsistent with guidance and warnings from the manufacturer.”

There was one other recent case outside of the United States.

- 2020: Model 3, Taiwan — A driver in Taiwan found himself the object of media attention when traffic cameras caught his Tesla Model 3 failing to stop for an overturned commercial truck. Autopilot was engaged at the time of the collision, the driver claimed.

What’s the take-home message? Pay attention to the road. Keep your eyes on the road. This is advice we all were given when we took driver’s ed as teens, and it’s still relevant today.

Final Thoughts about Tesla Autopilot Design

On the hardware and software front, there is clearly one area where Autopilot still struggles on the highway. It does not accurately identify large trucks stopped in the middle of the road. What is needed so the system of cameras and radar can perceive such large obstacles? Will the coming Autopilot rewrite, which turns “2D” Autopilot code into “3D” Autopilot code, solve that problem?

Will Tesla need to add a mechanism to gauge driver gaze in order to improve its Autopilot safety record?

How will this story develop when the Tesla Network, a ridesharing service built on privately owned Tesla vehicles, is opened to the public?

What changes will be required for robotaxis to be practical and safe?

What adjustments and adaptations are needed for Teslas to become capable of full self-driving?

Original Publication by Carolyn Fortuna at CleanTechnica.
