In this short article, we seek to raise important issues about the philosophy behind “self driving” and its broader implications in aircraft cockpit design. Neither of us is an expert in the topic area, as our studies are in the humanities. We both own Teslas and have sought input from a systems engineer about AI and neural nets. The various websites below are included so that you, dear reader, can make your own inquiries. Please include your thoughts in the comments section underneath this article.

What happened to Air France Flight 447? We are now aware of all the drama around AF447. To us, it is an exemplar of the consequences of certain types of human-automation interface, and of where this interface fails. On the 1st of June 2009, on a flight between Rio de Janeiro and Paris, three pilots lost control of a fully functioning Airbus A330, which, under the manual control of the pilots, stalled and struck the Atlantic in a near-level attitude at speed, with the loss of 228 lives. It took two years to find the flight recorders (the “black boxes”), located at a depth of nearly 4 km. As of May 2021, Airbus and Air France have been charged with involuntary manslaughter.

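Loss of control typically occurs when pilots fail to recognise and correct a potentially dangerous situation, causing a perfectly functioning aircraft to enter an unstable and unrecoverable condition. This is called Loss of Control In-flight (LOC-I). It is distinct from Controlled Flight Into Terrain (CFIT), in which an aircraft that is still under the crew's control is unknowingly flown into the ground or sea.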

Such a “loss of control” event has, in our opinion, ginormous implications for Full Self Driving in automobiles.

Where do AI and automation come into the picture? Artificial deep neural networks (DNNs) have achieved great success recently in many important areas that deal with text, images, videos, graphs, and so on. However, the black-box nature of AI and DNNs has become one of the primary obstacles to their wide adoption in mission-critical applications such as medical diagnosis, therapy, law, and social policy. Because of the huge potential of deep learning, increasing the interpretability of deep neural networks has recently attracted much research attention.

The issue is that once DNNs are “released into the wild,” they are almost impossible to correct. Tesla’s FSD relies on such DNNs. Without a human in the loop, automated decision systems of this kind can generate disasters, such as the Dutch childcare benefits scandal and Robodebt in Australia.¹

Furthermore, imagine a scenario next year when HASD (High Automation Self-Driving) comes into play: it’s a rainy night on a country road, and the self-driving system decides to depart for the night. The driver must decide, within a few seconds, what is going on and what corrective actions are required.

Here we see the somewhat bizarre danger in “almost” foolproof automation, as in the Air France case: when the automation stops, it does so at the “peak” level of stress, with multiple simultaneous dramas and only a couple of safe ways out of the situation. In this admittedly infrequent situation, no automation may be better than partial automation. For instance, in the Air France situation, the pilots could not fly the aircraft manually (i.e., haptically), just as a driver used to automation might struggle to drive a car manually.²

The six levels of autonomous driving: what happens to the haptic? Level 0: no automation, historic motor vehicles; Level 1: basic driver assistance (~2014); Level 2: partial automation (~2017), cruise control, lane departure warning; Level 3: conditional automation (~2020), adaptive cruise control, Tesla self-driving on a motorway; Level 4: high automation (~2023), FSD Beta; Level 5: full automation (~2025), no steering wheel, etc.
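One way to read the six levels is by what each still asks of the human. The sketch below is our own illustration, loosely following the common summary of the SAE J3016 levels; the names `DrivingLevel` and `human_role` are hypothetical and do not come from any manufacturer or standard.

```python
from enum import IntEnum


class DrivingLevel(IntEnum):
    """The six levels of driving automation, numbered 0 to 5."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_role(level):
    """Describe, in one phrase, what the human is still expected to do."""
    level = DrivingLevel(level)
    if level <= DrivingLevel.PARTIAL_AUTOMATION:
        # Levels 0-2: the human drives, or supervises the assistance at all times.
        return "drives or supervises continuously"
    if level == DrivingLevel.CONDITIONAL_AUTOMATION:
        # Level 3: the system drives, but may hand control back at short notice.
        return "must take over when the system asks"
    # Levels 4-5: the system is its own fallback while it is engaged.
    return "is not needed as a fallback while the system is engaged"


for level in DrivingLevel:
    print(level.value, level.name, "- the human", human_role(level))
```

Read this way, Level 3 is the only level whose definition depends on a handover back to the human, which is exactly the moment this article is concerned with.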

The issue of legal responsibility in accidents involving Level 3 and 4 vehicles is a huge one, and potentially one that is slowing the adoption of self-driving. Intriguingly, as of March 2022, Mercedes-Benz has accepted legal responsibility for its autonomous “Drive Pilot” system, which can automatically brake, accelerate, steer within its lane, and turn off highways.

Now we go back to Air France and the design of autonomous flight/driving, especially for L3 and L4. We see that Airbus has a side-stick on each side of the cockpit, such that one pilot does not know haptically (i.e., by feel) what the other pilot is doing, as the two side-sticks are not mechanically linked.

This is in contrast to Boeing cockpit design, where the centre control columns (yokes) move in unison, as they are mechanically linked. So, yes, the pilot/driver is still in the loop, but at the beginning (DeLorean) or at the end (Tesla³)? The difference matters, and matters a lot.

Where is the driver in the loop? If Tesla’s philosophy of automation is to take the driver out of the loop, the driver at best becomes an extension of the car, as with Airbus. With DeLorean/Boeing, the car/plane is not designed to become a “shield” against the outside world and road conditions. (See 16–18 minutes into this 30-minute podcast.) DMC CEO Joost de Vries explains the difference between being driven by the car and driving the car, whether a Tesla, Mercedes, Volkswagen, or DMC. However, will DeLorean be stuck at L3?

While Level 5 would mean virtually no human-error accidents, getting there in the next 3–5 years will probably be quite a rough period, with accidents at the human-machine interface as control is handed over from one to the other and humans are taken out of the loop. We keenly await DeLorean’s take on automaticity.

¹ DNNs are a form of AI and are loosely modelled on the human brain. They can also undertake “machine learning,” at which point “machine ethics” enters stage left: the “hive mind” in charge of “moral machines.” They are qualitatively different from the more commonly known everyday control systems. A control system manages, commands, directs, or regulates the behaviour of other devices or systems using basic positive or negative feedback loops (e.g., a thermostat or engine governor). In this article, we are talking about the former (DNNs).
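To make the contrast concrete, the thermostat mentioned in this footnote can be sketched in a few lines. This is a minimal illustration under our own assumptions: the function names, the 20-degree setpoint, the half-degree dead band, and the toy room model are all hypothetical. Unlike a DNN, every decision here can be read straight off the code.

```python
def thermostat_step(temperature_c, heater_on, setpoint_c=20.0, band_c=0.5):
    """Return the new heater state for one pass of an on/off feedback loop."""
    if temperature_c < setpoint_c - band_c:
        return True   # too cold: switch the heater on
    if temperature_c > setpoint_c + band_c:
        return False  # too warm: switch the heater off
    return heater_on  # inside the dead band: leave the heater as it is


def simulate(start_c=17.0, steps=30):
    """Toy room (assumed numbers): the heater adds 0.4 degrees per step,
    and the room always loses 0.2 degrees per step."""
    temperature_c, heater_on, history = start_c, False, []
    for _ in range(steps):
        heater_on = thermostat_step(temperature_c, heater_on)
        if heater_on:
            temperature_c += 0.4
        temperature_c -= 0.2
        history.append(round(temperature_c, 2))
    return history


print(simulate())
```

The loop warms the room towards the setpoint and then holds it within a narrow range around it; nothing in it is learned from data.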

² Incidentally, a key reason for the early success of the Luftwaffe in WW2 was that the Treaty of Versailles, which limited Germany militarily, meant its pilots had to learn in gliders: the essence of hands-on, haptic, manual flight. This would prove so necessary in the coming dogfights.

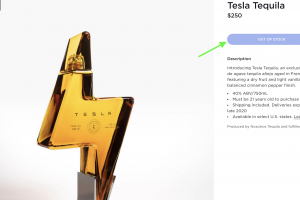

³ Elon Musk has said in a recent interview, “if a driver needs to make a decision, the car should do that for you.”

Dr Paul Wildman is a retired crafter and academic. He was director of the Queensland Apprenticeship system for several years in the early 1990s and is enthusiastic to demonstrate the importance of craft, peer-to-peer manufacture, collaboration and “our commons” in social, economic, and technological innovations such as EVs. Paul is long on Tesla and trying to prove a Fox Terrier can be trained. See Paul’s crafter podcasts: The paulx4u’s Podcast.