It’s fascinating to think of systems like Tesla’s Full Self-Driving (FSD) beta as a kid who learns while we supervise and keep things safe. We hope that the child will finally grow up to be like us, and maybe even better than humans when it comes to driving.

Numbers vs Ideas

The whole point of machine learning, and artificial intelligence in general, is to get machines that only process numbers (computers) to work with ideas.

Although many real-world problems and games, such as chess, can be reduced to equations and fed into computers, something as simple as “is that a stop sign or a yield sign?” is not easily reduced to mathematics. Varying lighting conditions, viewing angles, sizes, wear and tear, plants growing part-way in front of the sign, and many other factors all add confusion. Handling all of this with the “if, then” rules of conventional programming would take an impossible number of rules. You’d have to program for nearly every scenario, and the slow, bloated software would still have gaps.
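To get a feel for how quickly the rule count explodes, here is a rough back-of-the-envelope sketch in Python. The categories and the values in each are invented for illustration; real conditions such as viewing angle vary continuously, so any discrete count like this understates the problem.

```python
from itertools import product

# Hypothetical, deliberately coarse condition categories for a single road sign.
# The values are made up for illustration; real conditions vary continuously.
conditions = {
    "lighting": ["dawn", "noon", "dusk", "night", "headlight glare"],
    "viewing angle": ["head-on", "slightly left", "slightly right", "sharp left", "sharp right"],
    "size": ["small", "standard", "oversized"],
    "wear": ["new", "faded", "vandalized"],
    "obstruction": ["none", "partly hidden by plants", "partly covered by snow"],
}

# Every combination is a scenario a hand-written "if, then" rule set would need to handle.
scenarios = list(product(*conditions.values()))
print(len(scenarios))  # 675

# Adding just one more three-valued factor (say, clear / rain / fog) triples the count.
print(len(scenarios) * 3)  # 2025
```

And that is one sign in isolation, before accounting for other signs, vehicles, or pedestrians in the same scene.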

Believe it or not, this strategy was attempted in the 1980s. Many complicated problems can be broken down into lots of small rules that can be programmed. If you give a computer system the appropriate knowledge and rules, you can create an “expert system” that helps a non-expert make decisions the way an expert would. The problem was that human experts’ time is precious, and it’s costly to have them work with developers. There was also a lot of resistance from highly paid experts to building machines that could eventually replace them, and that resistance could only be overcome by paying them even more.
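For a sense of what such a system looks like, here is a minimal Python sketch of a rule-based “expert system” using forward chaining: it keeps applying rules whose conditions are met until no new facts appear. The car-trouble rules and fact names are hypothetical stand-ins for knowledge an expert mechanic might supply; real systems needed far more rules than this.

```python
# Hypothetical rules: (facts that must all be known, new fact to conclude).
RULES = [
    ({"no_crank", "dash_lights_dim"}, "battery_low"),
    ({"battery_low"}, "diagnosis: charge or replace the battery"),
    ({"no_crank", "dash_lights_normal"}, "diagnosis: check the starter motor"),
    ({"cranks", "no_start", "fuel_gauge_empty"}, "diagnosis: out of fuel"),
]

def infer(facts):
    """Forward chaining: fire every rule whose conditions hold until nothing new is added."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return sorted(f for f in known if f.startswith("diagnosis:"))

print(infer({"no_crank", "dash_lights_dim"}))  # ['diagnosis: charge or replace the battery']
print(infer({"cranks", "no_start"}))           # [] (no rule covers this case)
```

The second query shows the weakness: if a situation doesn’t exactly match a rule someone thought to write, the system has nothing to say.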

And even when the system was finished, it couldn’t solve every problem the experts could, so you end up paying twice: once for the experts to help build the system, and again when the system falls short.

Machine learning, on the other hand, isn’t supposed to require expert teachers. Instead of humans manually writing complex rules into the software, machine learning uses example data to modify itself until it matches the correct results in that data. Put more simply, you give it the input data and the desired results, and it adjusts the calculations it performs internally until it gets them right. Then, in principle, it will produce correct outputs when you feed it new data.
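Here is a minimal Python sketch of that idea, assuming the simplest possible model: a straight line with two adjustable numbers, a slope `w` and an offset `b`. The example data happens to follow y = 2x + 1, but the program is never told that; it repeatedly nudges `w` and `b` to shrink the gap between its outputs and the desired results (a technique called gradient descent), then applies what it learned to an input it has never seen.

```python
# Example data: (input, desired output) pairs. They follow y = 2x + 1,
# but the program is never told that rule.
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = 0.0, 0.0       # the model's adjustable internal numbers, starting from scratch
learning_rate = 0.05  # how big each correction step is

for epoch in range(2000):
    for x, target in examples:
        prediction = w * x + b
        error = prediction - target
        # Move each parameter in the direction that shrinks the squared error.
        w -= learning_rate * error * x
        b -= learning_rate * error

print(f"learned w = {w:.2f}, b = {b:.2f}")          # close to 2 and 1
print(f"prediction for x = 10: {w * 10 + b:.1f}")  # close to 21
```

Real systems have millions or billions of such numbers instead of two, but the principle of adjusting them to match example outputs is the same.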

One issue is that machine learning is only as good as the data it gets. Amazon found this out the hard way when it decided to automate part of its recruiting process. After being fed 10 years of resumes, along with who ended up being hired, the machine learning system was ready to review new resumes and make hiring decisions, but Amazon soon discovered that the software was biased against women. While Amazon’s HR workers were certainly not deliberately biased against women, training adjusted the software to match previous hiring practices, and with them, a bias against hiring women.
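The mechanism can be reproduced in miniature. The Python sketch below trains a simple logistic regression model (standing in for a far more complex real system) on a handful of invented historical records. Each record holds years of experience, a hypothetical 0/1 `has_flag` feature assumed to correlate with gender, and whether the person was hired. Because the invented past decisions favored candidates without the flag, the trained model gives the flag a negative weight, even though nobody wrote a rule saying so.

```python
import math

# Invented historical records: (years_of_experience, has_flag, was_hired).
# "has_flag" is a hypothetical resume feature that happens to correlate with gender.
# The labels mirror a biased past: at equal experience, flagged candidates were hired less.
history = [
    (1, 0, 0), (3, 0, 1), (5, 0, 1), (7, 0, 1),
    (1, 1, 0), (3, 1, 0), (5, 1, 0), (7, 1, 1),
]

def sigmoid(z):
    # Numerically stable logistic function: squashes any score into (0, 1).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

w_years, w_flag, bias = 0.0, 0.0, 0.0
lr = 0.05
for epoch in range(2000):
    for years, flag, hired in history:
        p = sigmoid(w_years * years + w_flag * flag + bias)  # predicted chance of "hire"
        err = p - hired  # gradient of the log-loss with respect to the model's score
        w_years -= lr * err * years
        w_flag -= lr * err * flag
        bias -= lr * err

print(f"weight on experience: {w_years:+.2f}")  # positive
print(f"weight on the flag:   {w_flag:+.2f}")   # negative: the old bias was learned
```

Nothing in the training loop mentions gender; the penalty comes entirely from the example labels.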

This happens because nothing is learned explicitly by an artificial neural network. It calculates lots and lots of values (loosely, probabilities) and feeds them into other parts of the network, which calculate still more values. The weights of this complex system (the significance given to each value) are adjusted until the inputs produce the correct outputs most of the time. There’s no awareness, experience, or conscience in the network.
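A tiny example makes this concrete. The Python sketch below is a two-layer network (four hidden units, an arbitrary choice) learning XOR, where the output should be 1 only when exactly one input is 1. Each unit squashes its input into a number between 0 and 1, which can loosely be read as a probability, and the hidden layer’s values feed into the output unit. Training by backpropagation adjusts the weights to reduce the error; nothing in the code describes what XOR “means.”

```python
import math
import random

random.seed(0)  # fixed seed so runs are repeatable

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# XOR: output 1 only when exactly one input is 1. A single unit can't learn this,
# so hidden-layer values are fed into a second layer.
data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

HIDDEN = 4
# w1[j] = [weight from input 0, weight from input 1, bias] for hidden unit j
w1 = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(HIDDEN)]
# w2 = weight from each hidden unit to the output, plus the output's bias at the end
w2 = [random.uniform(-1, 1) for _ in range(HIDDEN + 1)]

def forward(x):
    hidden = [sigmoid(w[0] * x[0] + w[1] * x[1] + w[2]) for w in w1]
    out = sigmoid(sum(w2[j] * hidden[j] for j in range(HIDDEN)) + w2[HIDDEN])
    return hidden, out

def loss():
    # Mean squared error over the four examples.
    return sum((forward(x)[1] - y) ** 2 for x, y in data) / len(data)

initial_loss = loss()
lr = 0.5
for epoch in range(10000):
    for x, y in data:
        hidden, out = forward(x)
        # Backpropagation: how much each weight contributed to the error.
        d_out = (out - y) * out * (1 - out)
        for j in range(HIDDEN):
            d_hidden = d_out * w2[j] * hidden[j] * (1 - hidden[j])  # uses w2[j] before its update
            w2[j] -= lr * d_out * hidden[j]
            w1[j][0] -= lr * d_hidden * x[0]
            w1[j][1] -= lr * d_hidden * x[1]
            w1[j][2] -= lr * d_hidden
        w2[HIDDEN] -= lr * d_out

final_loss = loss()
for x, y in data:
    print(f"{x} -> {forward(x)[1]:.2f} (target {y})")
```

The outputs typically end up close to the targets, though the exact values depend on the random starting weights, and an unlucky start can leave a network stuck at a poor solution.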

Rich example data can produce good results, but the only thing the artificial neural network considers is whether its output matches the example data it was trained on.

Human Thinking

Over the millennia, a great deal of work has gone into understanding human thought. It is immensely important to know how human beings learn, adapt, and make decisions. The more we learn about ourselves, the better we can handle tough circumstances.

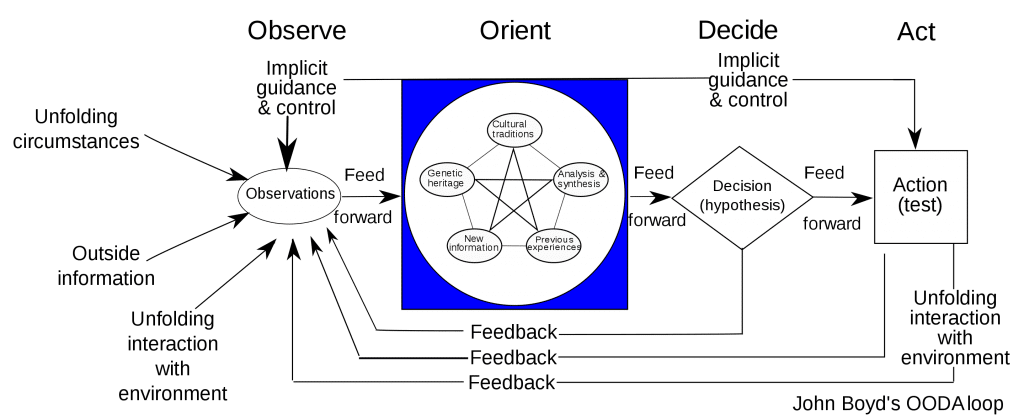

There’s a lot more going on under the hood when human decision-making draws on something like example data (our past experience and training). Our perceptions are fed into a “machine” that is far more complex. We look for patterns that fit not only our past experience, but also new information we have just heard, knowledge from our society, and any religion we may have been raised in, and we can use creativity to combine these distinct things before acting. U.S. Air Force Colonel John Boyd captured all of this in his famous “OODA Loop” (Observe, Orient, Decide, Act). It’s a loop because the process repeats over and over again.

We are also able to make good decisions in a hurry with minimal information. Using what Malcolm Gladwell calls “thin slicing” in Blink, people have shown the potential to sometimes make better decisions in a hurry than they do after careful thought. Our ability to pick out the relevant information quickly, often subconsciously, leads to “gut instincts” or “I have a bad feeling about this.”

We also have a Theory of Mind. As social animals, it is incredibly important for us to know what other people are probably thinking. We can make choices based not only on what others think, but also on what they will think if we act in a certain way. We also consider what others think we are thinking, and even what others think we think they are thinking. It sounds complicated, but it comes naturally to us because cooperation is vital to our survival.

On the other hand, our decision-making can be misdirected by prejudice, bad cultural knowledge, mental illness, adrenaline, and deliberate deception.

How This Pertains To Autonomous Vehicles

For self-driving vehicles, you give the program photos of curbs and roads, and it adjusts itself to classify them better, so it can determine where to drive and where not to (among many other things). The network isn’t concerned with what other drivers are thinking (Theory of Mind), nor with what other drivers assume it may be thinking. It’s okay to slowly overtake another driver in the fast lane, but if a line of cars starts backing up behind us, it may not be. We realize that others can become angry and upset, so we change our actions to avoid confrontation. The program does not have “gut feelings.” It does not think about deceiving the AI systems of other vehicles. It does not recognize the signs that the driver of another car in the next lane is frustrated and teetering toward anger.

A self-driving car doesn’t see red and blue lights flashing in the mirror and wonder whether they might belong to a fake officer. As required by law, it simply pulls over; it doesn’t think to call 911 first to confirm that the odd-looking police car is legitimate.

Some of the correct human reactions to these situations may be captured in the training data the system learns to imitate, but others will not be.

On the other hand, a computer never gets tired. It never gets drunk. It doesn’t have bad days at the office, traumatic breakups, or road rage. It never checks social media while driving, and it isn’t distracted by phone calls or text messages.

In the long run, which driver is safer comes down to whether a computer that is limited, but reliably does what it does, is safer than a less limited but imperfect human.

Want to buy a Tesla Model 3, Model Y, Model S, or Model X? Feel free to use my referral code to get some free Supercharging miles with your purchase: http://ts.la/guanyu3423

You can also get a $100 discount on Tesla Solar with that code. Let’s help accelerate the advent of a sustainable future.