Five Texas law enforcement officers are suing Tesla for monetary relief of over $1,000,000 after a Tesla Model X collided with the officers and their vehicles. According to the police report, the driver of the Tesla Model X was inebriated at the time.

On February 27, 2021, a Tesla Model X, reportedly with Autopilot engaged, crashed into five police officers on the Eastex Freeway in Texas. The plaintiffs claim that Tesla’s safety features failed to detect the officers’ cars because of “manufacturing defects.”

The Texas police officers are also suing Pappas Restaurant Inc., which owns Pappasito’s Cantina, where the Tesla Model X driver was served alcohol before the accident. The police report from the crash stated that the driver was arrested “on suspicion of intoxication assault.”

TESLA AUTOPILOT VS. FSD CONFUSION CONTINUES

Any Tesla owner or supporter who reads the lawsuit will quickly identify its inaccuracies about Autopilot. The most obvious flaw is that it confuses Tesla Autopilot with Tesla’s Full Self-Driving suite.

The lawsuit lists supposed Autopilot features to support the plaintiffs’ claim that Tesla inaccurately marketed its driver assistance system as safe. The lawsuit claims the list of Autopilot features was compiled from Tesla’s website. The list includes the “Navigate on Autopilot” feature, which is currently listed under the Full Self-Driving suite.

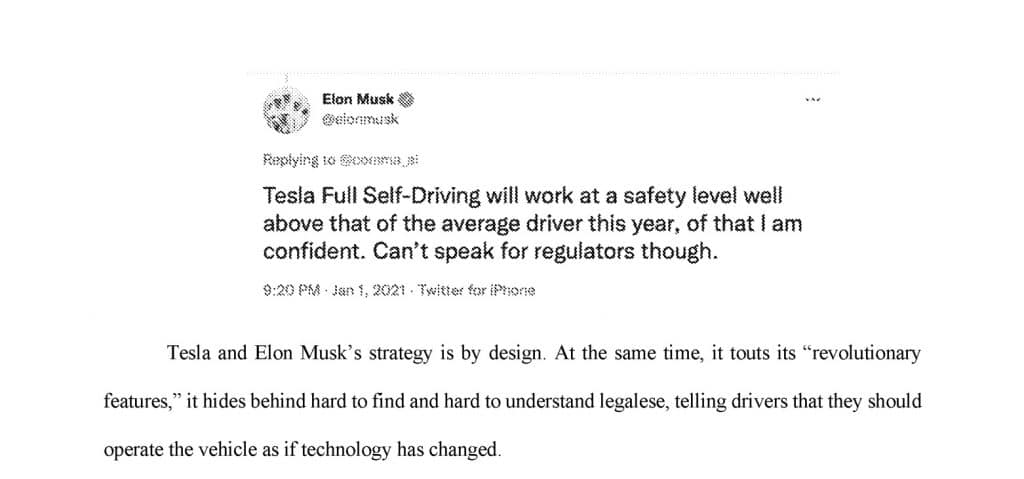

The lawsuit also includes tweets from Elon Musk. In one instance, the lawsuit states: “Tesla, Inc. and its CEO, Elon Musk, have also repeatedly exaggerated the actual capabilities of Autopilot, resulting in the public, including first responders, and Tesla drivers being put at a significant risk of serious injury or death:”

Then the lawsuit includes the following screenshot of an Elon Musk tweet, which clearly references FSD.

This recent lawsuit by five Texas police officers reveals the ongoing confusion about Tesla’s safety features, particularly between Autopilot and Full Self-Driving. It also shows the fear, uncertainty, and doubt (FUD) that Tesla critics continue to sow about its safety systems.

THE IRONY OF TESLA AUTOPILOT CRITICISM

Ironically, Tesla created Autopilot and its Full Self-Driving suite to prevent people from misusing their vehicles, as happened in this case, where the driver operated a Tesla Model X irresponsibly. Critics of Tesla Autopilot and Full Self-Driving constantly blame the company when drivers misuse their vehicles, as this recent lawsuit shows.

During the last earnings call, Elon Musk explained, once again, why software like Autopilot and Tesla FSD is necessary for modern vehicles.

“At scale, it will have billions of miles of travel to be able to show that it is the safety of the car with autopilot on is 100% or 200% or more safer than the average human driver,” Musk said. “At that point, I think it would be unconscionable to not to allow autopilot because the car just becomes way less safe. It would be sort of like shake the elevator analogy. Back in the day, we used to have elevator operators with a big switch. They operate the elevator and move between floors.”

“But they get tired or maybe drunk or something or distracted and every now and again, somebody would be kind of sheared in half between floors. That’s kind of the situation we have with cars. Autonomy will become so safe that it will be unsafe to manually operate the car relatively speaking. And today obviously we just get an elevator where we press the button for which floor we want, and it just takes us there safely,” he explained.

A copy of the lawsuit can be seen below.