Key Takeaways

- Tesla has restarted work on its Dojo 3 AI training supercomputer now that the AI5 chip design is stable.

- Elon Musk confirmed the update on X and is hiring engineers to work on what he calls the world’s highest-volume AI chips: email AI_Chips@Tesla.com with 3 bullet points on the toughest technical problems you’ve solved.

- Tesla’s AI roadmap: AI4 achieves self-driving safety above humans; AI5 makes vehicles “almost perfect” and boosts Optimus; AI6 targets Optimus and data centers.

- AI7/Dojo 3 designed for space-based AI compute.

- The update resolves past confusion: Tesla paused Dojo last year to avoid splitting resources, favoring AI5/AI6 clusters, but AI7 now serves as the dedicated Dojo successor.

- AI7, AI8, and AI9 chips to be developed in rapid 9-month cycles for quicker deployment.

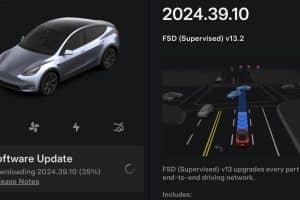

In a bombshell update that’s sending shockwaves through the AI and automotive worlds, Tesla CEO Elon Musk has confirmed the resurrection of the company’s long-dormant Dojo 3 project. Announced via a post on X just yesterday, the move comes hot on the heels of stabilizing the design for Tesla’s next-gen AI5 chip, signaling a strategic pivot back to in-house supercomputing.[1][2] As someone who’s followed Tesla’s AI journey since the first AI Day in 2021, I see this as more than a project reboot: it’s a declaration of independence from Nvidia and a bold bet on vertically integrated AI hardware that could redefine autonomy, robotics, and even space tech.

A Quick Recap: What Exactly is Tesla’s Dojo?

For the uninitiated, Project Dojo is Tesla’s custom-built AI training supercomputer, optimized for crunching petabytes of real-world video data from its fleet of vehicles. Unlike general-purpose GPUs from Nvidia, Dojo’s architecture, powered by proprietary D1 chips (and successors), is laser-focused on neural network training for Full Self-Driving (FSD) and Optimus humanoid robots. Announced at AI Day 2021, the initial Dojo system promised over 1 exaFLOP of compute at BF16 precision, with massive on-chip SRAM (1.3 TB across a full ExaPOD) and high-bandwidth interconnects to minimize latency.[3]

Key specs from early Dojo iterations:

- D1 Chip: 645 mm² die with 576 bi-directional SerDes channels for 8 TB/s edge bandwidth.

- Training Tile: 25 D1 chips per tile and 6 tiles per system tray, scaling to cabinets and ExaPODs (up to 1,062,000 training nodes, i.e. cores).

- Efficiency Edge: 4x better AI training perf/$ than competitors, with a 5x smaller physical footprint.[4]
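These specs can be sanity-checked with simple multiplication. The sketch below is a back-of-envelope calculation, not Tesla’s own methodology. Apart from the 6 tiles per tray listed above, the inputs (354 training nodes per D1, 1.25 MB of SRAM per node, 362 BF16 TFLOPS per D1, 25 D1 dies per tile, 2 trays per cabinet, 10 cabinets per ExaPOD) are figures recalled from the AI Day 2021 presentation and should be treated as assumptions.

```python
# Back-of-envelope check of Dojo ExaPOD-scale figures.
# All constants except TILES_PER_TRAY are assumptions recalled from
# Tesla's AI Day 2021 presentation, not values confirmed by this article.

NODES_PER_D1 = 354          # training nodes ("cores") per D1 chip (assumed)
SRAM_MB_PER_NODE = 1.25     # on-node SRAM in MB (assumed)
BF16_TFLOPS_PER_D1 = 362    # peak BF16/CFP8 TFLOPS per D1 chip (assumed)
D1_PER_TILE = 25            # 5x5 array of D1 dies per training tile (assumed)
TILES_PER_TRAY = 6          # from the spec list above
TRAYS_PER_CABINET = 2       # assumed
CABINETS_PER_EXAPOD = 10    # assumed


def exapod_totals() -> dict:
    """Multiply the per-unit figures up to one ExaPOD."""
    tiles = TILES_PER_TRAY * TRAYS_PER_CABINET * CABINETS_PER_EXAPOD
    chips = tiles * D1_PER_TILE
    nodes = chips * NODES_PER_D1
    return {
        "tiles": tiles,
        "d1_chips": chips,
        "nodes": nodes,
        # Decimal units: 1 TB = 1,000,000 MB
        "sram_tb": nodes * SRAM_MB_PER_NODE / 1_000_000,
        # 1 EFLOPS = 1,000,000 TFLOPS
        "bf16_eflops": chips * BF16_TFLOPS_PER_D1 / 1_000_000,
    }


if __name__ == "__main__":
    for name, value in exapod_totals().items():
        print(f"{name:>12}: {value:,}")
```

If those assumptions hold, the node count reproduces the 1,062,000 figure above, and the roughly 1.3 TB of SRAM and roughly 1.1 exaFLOPS of BF16 compute land at the level of a full ExaPOD rather than a single tile.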

Dojo wasn’t meant to replace Nvidia entirely but to complement it, handling Tesla-specific workloads like video-based computer vision where Tesla argued standard GPUs were a poor fit.

The Pause: Strategic Pivot or Temporary Setback?

Fast-forward to 2025: Rumors swirled as Tesla reportedly disbanded the Dojo team and shifted focus to “Cortex,” a massive Nvidia H100/H200 cluster at Giga Texas (100k+ GPUs, 500+ MW TDP).[5][6] Musk himself explained the rationale on X: Splitting resources across architectures didn’t make sense. Instead, clustering AI5/AI6 chips could mimic Dojo’s scale while simplifying cabling and costs by orders of magnitude. He even nodded to AI6 as a “spiritual successor,” calling Dojo 2 an “evolutionary dead end.”[6]
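Musk’s cabling argument can be illustrated with a deliberately simplified toy model. Everything in it is a hypothetical assumption for illustration, not Tesla’s network design: the all-to-all board topology, the 10,000-chip cluster size, and the chips-per-board values. Real supercomputers use switched or torus fabrics, where the savings would be smaller.

```python
# Toy model (hypothetical): why packing many chips per board cuts cabling.
# Assumes every board is cabled directly to every other board (all-to-all).
# Real clusters use switched fabrics, so this only shows the scaling trend.
import math


def mesh_cables(endpoints: int) -> int:
    """Cables needed to connect `endpoints` boards all-to-all."""
    return endpoints * (endpoints - 1) // 2


def cabling_reduction(total_chips: int, chips_per_board: int) -> float:
    """Cable count with 1 chip per board divided by the count with
    `chips_per_board` chips per board."""
    boards = math.ceil(total_chips / chips_per_board)
    if boards < 2:
        raise ValueError("need at least two boards to compare cabling")
    return mesh_cables(total_chips) / mesh_cables(boards)


if __name__ == "__main__":
    chips = 10_000  # hypothetical cluster size
    for per_board in (4, 16, 64):
        ratio = cabling_reduction(chips, per_board)
        print(f"{per_board:>3} chips/board -> {ratio:,.0f}x fewer cables")
```

In this toy model the reduction grows roughly with the square of the chips per board, which is one way a board-level change could plausibly add up to “orders of magnitude.” In a switched network, where cables scale with the number of endpoints, the gain would likely be closer to linear.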

Reasons for the 2025 Hiatus:

- Nvidia Shortages Eased: Tesla ramped up H100 equivalents to 67k+ for training FSD and Optimus.

- Convergence Strategy: AI inference chips (for cars/robots) were evolving to handle training at scale.

- Leadership Changes: Key figures like Ganesh Venkataramanan and Peter Bannon departed.[7]

This wasn’t abandonment—it was pragmatism. Tesla invested billions in AI infra, including $500M for a Buffalo Dojo cluster equivalent to 10k H100s.[8]

The Catalyst: AI5 Chip Hits “Good Shape” – Dojo 3 Rises Again

Enter 2026: Musk’s X post changes everything. “Now that the AI5 chip design is in good shape, Tesla will restart work on Dojo3.”[1] AI5, nearly complete and 10x more powerful than HW4/AI4, unlocks this revival by providing a stable foundation. No longer splitting focus—Tesla’s converging on a unified chip family that scales from vehicle inference to superclusters.

Musk’s hiring call is pure Elon: “If you’re interested in working on what will be the highest volume chips in the world, send a note to AI_Chips@Tesla.com with 3 bullet points on the toughest technical problems you’ve solved.”[1] The claim holds up on paper: with millions of Teslas on the road and potentially billions of Optimus units, unit volume could dwarf even Nvidia’s data center GPUs.

Decoding Tesla’s AI Chip Roadmap: From Roads to Orbit

Musk laid out the future in crystal-clear terms:

- AI4 (Current HW4): “Self-driving safety levels very far above human.”[9]

- AI5: Vehicles “almost perfect,” massive Optimus boost. High-volume production ~18 months out, fabbed at Samsung/TSMC.[10]

- AI6: Optimized for Optimus robots and data centers—bridge to supercomputing.

- AI7/Dojo 3: Space-based AI compute. The game-changer.[2]

And the cadence? A blistering 9-month cycle for AI7, AI8, AI9—faster than Nvidia/AMD’s yearly releases.[10] This rapid iteration, fueled by Tesla’s data flywheel, positions them to outpace commoditized silicon.
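To make the cadence claim concrete, here is a short sketch that counts releases in a fixed window. The 36-month window is a hypothetical choice, and the model assumes every cycle lands exactly on schedule, which real silicon programs rarely do.

```python
# Count chip generations that ship within a planning window under
# different release cadences. Purely illustrative arithmetic; assumes
# the clock starts right after a release and no cycle ever slips.


def generations_in_window(window_months: int, cadence_months: int) -> int:
    """Releases that fit in the window, the first arriving at `cadence_months`."""
    if cadence_months <= 0:
        raise ValueError("cadence must be positive")
    return window_months // cadence_months


if __name__ == "__main__":
    window = 36  # hypothetical three-year window
    for label, cadence in (("9-month cycle", 9), ("Yearly cycle", 12)):
        n = generations_in_window(window, cadence)
        print(f"{label}: {n} generations in {window} months")
```

Over three years that is four generations versus three, and the gap widens with the window (eight versus six over six years).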

What Does “Space-Based” Mean?

Hints point to Starlink/SpaceX synergy: Orbital data centers for low-latency global AI inference/training? Processing satellite imagery or enabling constellation-wide autonomy? It’s Musk’s multi-planetary vision in action: Dojo clusters in space, radiation-hardened for LEO.[11][12]

Hiring Blitz: Building the Dream Team

Tesla’s talent hunt underscores its ambition. Email AI_Chips@Tesla.com with your top 3 technical wins, and expect rigorous vetting for ex-Nvidia/AMD wizards. Per Musk, this could bring Dojo 3 along “sooner than expected.”[2]

Pro Tip for Applicants:

- Highlight video NN training, custom silicon, or high-bandwidth interconnects.

- Quantify impact: “Solved X problem, delivered Y perf gain.”

- Tesla values “hardcore” problem-solvers—Musk’s word.

Insights and Opinions: Why This Changes Everything

As a Tesla AI watcher, here’s my take:

- Nvidia Curveball: Tesla’s volume + custom design erodes Nvidia’s moat. Musk’s “highest volume chips” claim? Believable with Robotaxi fleets.

- Optimus Acceleration: AI5/6 supercharges humanoid scaling; Dojo 3 trains the army.

- Economic Edge: In-house silicon slashes costs 4x vs. GPUs, critical for $20k Optimus.

- Risks: Fab delays (TSMC bottlenecks), talent wars. But Tesla’s execution track record? Unmatched.

- SpaceX Crossover: Space-based compute = Starship + Dojo for Mars AI? Game over for Earth-bound rivals.
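The “slashes costs 4x” bullet is easy to misread as a 400% saving. Assuming the 4x figure is a perf/$ ratio (as in the Dojo spec list) and ignoring development, fab, and software costs, the arithmetic for buying a fixed amount of compute looks like this; the baseline spend is a hypothetical placeholder.

```python
# What a "4x perf/$" claim implies for the cost of a fixed amount of compute.
# The baseline spend is hypothetical; only the ratio matters. Ignores
# chip development, fab, and software costs.


def cost_for_fixed_compute(baseline_cost: float, perf_per_dollar_gain: float) -> float:
    """Cost to buy the same compute when perf/$ improves by the given factor."""
    if perf_per_dollar_gain <= 0:
        raise ValueError("perf/$ gain must be positive")
    return baseline_cost / perf_per_dollar_gain


if __name__ == "__main__":
    baseline = 100.0  # hypothetical GPU spend, arbitrary units
    new_cost = cost_for_fixed_compute(baseline, 4.0)
    savings_pct = 100 * (1 - new_cost / baseline)
    print(f"Cost: {new_cost:.0f} (was {baseline:.0f}), savings {savings_pct:.0f}%")
```

Under those simplifying assumptions, a 4x perf/$ edge means paying about a quarter as much, a 75% saving, for the same compute.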

Investment Angle:

- TSLA bulls: This supports the thesis that AI could eventually be worth 10x the auto business.

- Bears: Still execution risk, but roadmap clarity boosts confidence.

Advice for Enthusiasts/Engineers:

- Monitor Giga Texas expansions—next Cortex phase incoming.

- Read up on how Dojo’s software stack runs PyTorch models without CUDA.[3]

- Job hunters: Act fast; AI_Chips@Tesla.com is your ticket.

Wrapping Up: Tesla’s AI Empire Takes Flight

Dojo 3’s revival isn’t nostalgia—it’s evolution. From paused project to space-faring powerhouse, Tesla’s blending cars, robots, and orbits into an AI juggernaut. With AI5 stable and 9-month sprints, expect fireworks. Elon doesn’t tweet idly; this is the blueprint for world domination.

Stay tuned—I’ll update as Musk drops more breadcrumbs.